MAVIS (the MCAO Assisted Visible Imager & Spectrograph), to be installed on ESO’s VLT, will be driven by a high-performance real-time control (RTC) system built on cutting-edge hardware and software technologies. The system comprises a hard real-time pipeline together with supervisory and tightly coupled telemetry subsystems. To meet the extremely challenging requirements of a complex instrument like MAVIS, this forward-looking implementation of the COSMIC platform is designed to support, end-to-end, a wide range of control schemes, from classical model-based approaches to modern data-driven methodologies.

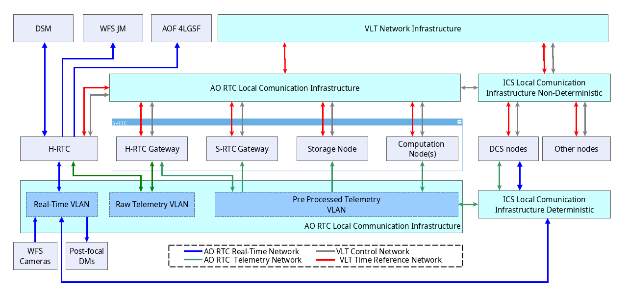

The HRTC is composed of a single node that receives sensor frames and publishes real-time telemetry through the AO RTC Internal Communication Infrastructure. It is also connected to the Deformable Secondary Mirror (DSM), the 4 Laser Guide Star Facility (4LGSF) steering mirrors and the Jitter Mirrors (JM) through dedicated interfaces.

The SRTC is split into 4 components:

- HRTC Gateway: receives real-time telemetry from the HRTC and broadcasts it to the other SRTC components
- SRTC Gateway:
  - receives commands from the Instrument Control System
  - monitors and controls all the processes on the SRTC
  - collects metadata for archiving purposes
- Storage Node: stores useful telemetry data for archiving and post-processing purposes
- Computation Node(s): execute all the SRTC Data Tasks

The MAVIS HRTC module has to drive both the DSM and the 4LGSF mirrors over legacy SFPDP links, as well as the LGS Jitter Mirrors over EtherCAT (1GbE copper link). As a baseline, the µXLink board from Microgate will be used to perform low-latency data transfer from the HRTC module. This board was developed in the context of the Green Flash project and is now a COTS component available from Microgate. It is an interconnect board based on an Intel Arria 10 SX 660 SoC FPGA, in which an embedded dual-core ARM Cortex-A9 processor and the FPGA fabric share the same silicon to form a system on chip (SoC). An image of the µXLink board can be seen below.

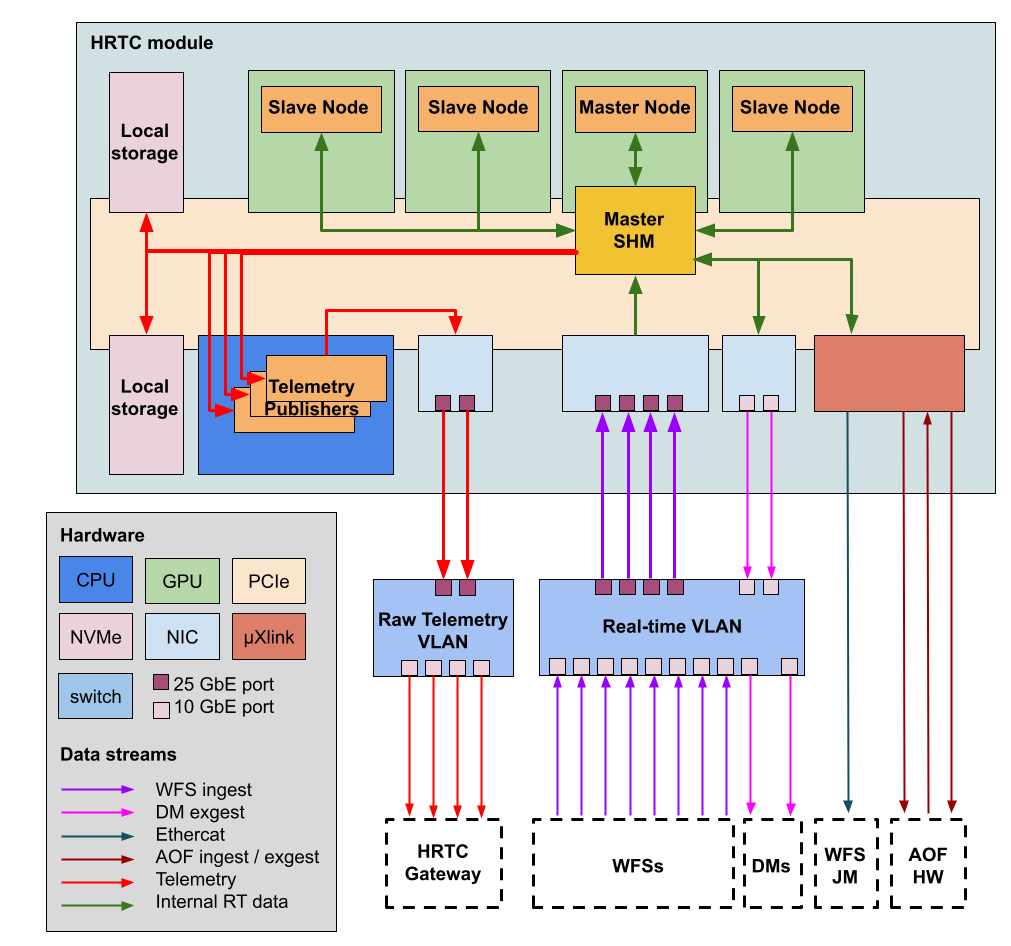

The tomographic reconstruction is the key specification driving the dimensioning of the real-time pipeline, both in terms of compute throughput and of maximum latency. The corresponding Matrix Vector Multiplication (MVM) implementation leads to a memory bandwidth requirement in excess of 2.0 TB/s. With at least 2 NVIDIA A100 GPUs (1.2 TB/s sustained each, i.e. 2.4 TB/s in aggregate), this requirement should in theory be met. We have considered integrating up to 4 GPUs in the HRTC system to provide contingency. The additional GPUs could either be used during operation, to provide extra compute power and bring the overall latency down, or be kept as spares in the final system. The figure below provides details on the different datapaths in the HRTC module.
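The sizing argument above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the aggregate bandwidth scales linearly with the number of GPUs and ignores inter-GPU transfers. The helper names are hypothetical. Since the matrix dimensions and time budget are not given here, `mvm_bandwidth_tb_s` leaves them as parameters.

```python
import math

# Figures quoted in the text.
REQUIRED_TB_S = 2.0           # memory bandwidth needed by the tomographic MVM
SUSTAINED_PER_GPU_TB_S = 1.2  # sustained memory bandwidth of one NVIDIA A100


def mvm_bandwidth_tb_s(n_rows, n_cols, bytes_per_element, time_budget_s):
    """Bandwidth (TB/s) needed to stream an n_rows x n_cols matrix once
    from memory within the given time budget (an MVM reads each element once)."""
    return n_rows * n_cols * bytes_per_element / time_budget_s / 1e12


def gpus_needed(required_tb_s, per_gpu_tb_s):
    """Smallest GPU count whose aggregate sustained bandwidth meets the need.
    Assumption: ideal linear scaling across GPUs."""
    return math.ceil(required_tb_s / per_gpu_tb_s)


def bandwidth_margin(n_gpus, per_gpu_tb_s, required_tb_s):
    """Ratio of aggregate available bandwidth to required bandwidth."""
    return n_gpus * per_gpu_tb_s / required_tb_s


min_gpus = gpus_needed(REQUIRED_TB_S, SUSTAINED_PER_GPU_TB_S)
margin_baseline = bandwidth_margin(2, SUSTAINED_PER_GPU_TB_S, REQUIRED_TB_S)
margin_contingency = bandwidth_margin(4, SUSTAINED_PER_GPU_TB_S, REQUIRED_TB_S)
```

With the quoted figures, the minimum is 2 GPUs, which gives a margin factor of 1.2. The 4-GPU configuration raises the factor to 2.4.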

Real-time data from the sensors and to the actuators are ingested and output through a combination of Mellanox ConnectX-6 NICs and, for legacy hardware, a µXLink board. Data are written directly to GPU shared memory so that they are available to all GPU computing processes. To and from the NICs, direct GPU memory access is enabled using DPDK, which includes gpudev, a library developed by NVIDIA that allows packets to be copied directly into GPU memory using GPUDirect and the DPDK mbuf structure. Once all the packets have been received, the GPU is notified and reorders the packets so that the full WFS frame is available for computations. To and from the µXLink board, direct access is likewise enabled using gpudev, together with a custom firmware and driver for the µXLink developed in collaboration with Microgate. Any required packet reordering is also performed directly onboard.
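The reordering step described above can be illustrated with a small CPU-side sketch. It does not use DPDK or gpudev, and on the actual system this step runs on the GPU. The packet layout is a hypothetical assumption made for the example: a 4-byte header carrying the packet index and the packet count.

```python
import struct

# Hypothetical header layout: little-endian (packet index, packet count).
HEADER = struct.Struct("<HH")


def make_packets(frame, payload_size):
    """Split a frame (bytes) into packets carrying the hypothetical header."""
    chunks = [frame[i:i + payload_size]
              for i in range(0, len(frame), payload_size)]
    return [HEADER.pack(i, len(chunks)) + c for i, c in enumerate(chunks)]


def reassemble(packets):
    """Rebuild the frame from packets received in any order.

    Each payload is placed in the slot given by its index; the frame is
    only returned once every slot has been filled.
    """
    slots = None
    for pkt in packets:
        index, count = HEADER.unpack_from(pkt)
        if slots is None:
            slots = [None] * count
        slots[index] = pkt[HEADER.size:]
    if slots is None or any(s is None for s in slots):
        raise ValueError("incomplete frame")
    return b"".join(slots)


# Usage: packets arriving in reverse order still yield the original frame.
frame = bytes(range(256)) * 4
received = list(reversed(make_packets(frame, 100)))
restored = reassemble(received)
```

Placing each payload by index, rather than sorting the packets, keeps the cost linear in the number of packets and makes a missing packet easy to detect.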

Real-time data streams converge onto the shared memory (SHM) of a Master GPU. Parts of the pipeline, including the core MVM computations, are distributed over multiple GPUs, using the Master GPU SHM as a hub for data distribution and gathering. Telemetry data streams are transferred from the Master GPU SHM to a CPU process in charge of publishing them to the outside world.
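The hub pattern above (distribute the input, compute partial results, gather the output) can be sketched in plain Python. The text does not say how the MVM is partitioned, so the row-block split used here is an assumption, and the sequential loop merely stands in for GPUs working in parallel. All function names are hypothetical.

```python
def split_rows(n_rows, n_workers):
    """Return (start, stop) row ranges, as evenly sized as possible."""
    base, extra = divmod(n_rows, n_workers)
    ranges, start = [], 0
    for w in range(n_workers):
        stop = start + base + (1 if w < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def partial_mvm(matrix_block, vector):
    """Work done by one worker: its block of rows times the full vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix_block]


def distributed_mvm(matrix, vector, n_workers):
    """Hub-style MVM: the full vector is sent to every worker, each worker
    multiplies its own row block, and the hub concatenates the pieces."""
    result = []
    for start, stop in split_rows(len(matrix), n_workers):
        result.extend(partial_mvm(matrix[start:stop], vector))
    return result


# Usage: the result does not depend on the number of workers.
matrix = [[1, 2], [3, 4], [5, 6]]
vector = [1, 1]
commands = distributed_mvm(matrix, vector, n_workers=2)
```

With a row-block split, each worker only reads its own slice of the matrix, so the memory traffic per device drops roughly in proportion to the number of devices. Only the input vector and the short output slices need to cross the hub.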